Who’s Watching the Watchers?

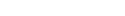

Feeling watched? Join us as Vermont Attorney General Charity Clark breaks down the new reality of digital surveillance. From school apps to AI scams, we dive into how companies collect your data and how to take control of your online privacy. The war for privacy is real—and it’s a fight you can’t afford to lose.

Episode 216

Cold Open

Beau: In this so-called post-privacy, who-cares-who-has-this-or-that-information-about-us world, there are some fierce holdouts.

Charity: Violation of privacy has been so normalized that people think they’re being a Karen if they say, no, I don’t want that information shared about my kid.

Beau: This week we’re talking to one of them, Vermont Attorney General Charity Clark, who’s taking on big data, among other things.

Charity: We allow private companies to do things we would never tolerate our government doing.

Beau: It’s a wide-ranging conversation that goes there.

Charity: I could literally be living my life, making a sandwich, doing whatever I want, but my evil AI bots could be romancing victims by the thousands. It’s extremely easy to imagine that happening.

Beau: If you lose sleep over shady crypto deals, AI overreach, and the future of privacy in America, you might wanna skip this episode.

Beau: I’m Beau Friedlander, and this is What the Hack, the show that asks: in a world where your data is everywhere, how do you stay safe online?

Intro

Beau: Charity Clark, welcome to the pod.

Charity: Thanks for having me.

Beau: Charity Clark is Attorney General of Vermont. She is a friend of the pod, and she’s also doing amazing work on behalf of Vermont and, through that work, the whole country.

Beau: Welcome, Charity.

Charity: It is so nice to be here.

Beau: It’s so nice to have you here. It’s comforting, because I have a lot of questions, and of course I follow what you’re doing up there in Vermont pretty closely. If you want to, you can too, on Instagram, Twitter, and other social platforms where you can stay up to date.

Start

Beau: When we talk about data privacy, it can sound a little abstract like it’s something only tech people or lawyers need to worry about, but the truth is your personal data is everywhere.

Beau: Your health records, your location, your face, your habits. Someone is collecting all of it, often without your permission. Sometimes it’s the government, right? Sometimes it’s a scammer. Sometimes it’s a giant tech company selling you shampoo based on an email you wrote to your cousin. So why does it matter?

Beau: Why should the average person, someone who isn’t doing anything wrong, still care about what happens with their data?

Charity: I think there are a lot of reasons, but I’m gonna focus on two big ones. The first is philosophical: do we believe that each of us has a right to privacy? Do we believe that we should be free from surveillance, free from intrusion on our financial data, our health data, all of that kind of ethos that I think is a part of America?

Charity: That’s the first bucket. The second bucket is more practical, and as a consumer attorney, it’s easy for me to talk about that too: our data makes us vulnerable to theft and scams. In a nutshell, protecting our data protects us from identity theft, from scams, from extortion. Data privacy is important in today’s world because our data is so easily everywhere, and it is so easy, when you take someone’s data, to use it for nefarious ends. So from a practical perspective, data privacy is also really important.

Beau: So I wanna dig in here, because this year, 2025, we’ve seen the Trump administration access some of the most sensitive data the government has on us in ways I haven’t seen before. While DOGE has been framed as a way to stop government spending, or slow it down, or get it under control, with an eye toward easing bureaucratic overreach, it has the look and feel of a big tech smash-and-grab.

Beau: Talk about that.

Charity: Well, I would just say, generally speaking, so far the Trump administration has not proven itself to care a lot about our privacy and about data. There is one little fact from one of the very early lawsuits we filed, which was about DOGE installing workers in positions they were not qualified for, or installing Elon Musk as the head of DOGE without going through the appropriate process. The one fact that literally lives rent-free in my head is that a worker was hired without anyone even Googling him, and then it came out that his very public Twitter account or something had all of these super offensive things in it that would disqualify him from holding a position like the one he had been hired to do.

Charity: And I just think the average person knows you don’t even go on a date with someone until you Google them. But you’re gonna let this person have access to all of our highly sensitive data?

Charity: It did not instill confidence in the administration, which, let us not forget, had already been in place for four years previously. They should be the experts on this stuff, and they didn’t even Google. And that’s just the beginning. I think from there we’ve repeatedly seen a disregard for the privacy of Americans.

Beau: This idea of swagger: yes, there are rules, but they don’t apply to me, because if you understood how great I am, you would understand that I’m not gonna do anything wrong.

Charity: Oh, the swagger. I feel like some of the topics I hope we touch on today are cousins of this same problem. One is cryptocurrency: you don’t get how amazing cryptocurrency is ’cause you’re not as brilliant as I am. As opposed to, maybe I’m just not piecing it together. And it’s like, that’s not a me thing, that’s a you thing.

Charity: And the other one is artificial intelligence: the risk of our data being used against us, being used for nefarious ends, or just AI generally being used to effect scams at a massive scale and destabilize the entire economy.

Charity: People don’t wanna hear it. They wanna think, well, you just don’t get it ’cause you don’t understand artificial intelligence. So, same kind of swagger in that arena as well.

Beau: Okay, so you’re AG of Vermont, and therefore I will just ask you point-blank: legal or not legal to have your own cryptocurrency that people around the world can buy, and that directly benefits the president of the United States?

Charity: Without being an expert on federal ethics laws, I think anyone can see, you don’t need to be an expert to see the potential for corruption in that scheme. Cryptocurrency, the meme coins, all of these seem very problematic to me. There’s a reason why cryptocurrency, as far as I can tell, is mostly used right now for three things. Number one, speculators trying to get in early so they can sell later. Number two, scams, right? Probably over half of the top 10 scams where people got scammed out of large quantities of money involve cryptocurrency.

Charity: And the third is buying stuff on the dark web. That’s where cryptocurrency comes in. I don’t know what else people are using their cryptocurrency for. And so I just think, how are we even here, with the president being such a champion of cryptocurrency, and then he himself and his family benefiting from cryptocurrency? It really boggles the mind.

Beau: Wait, are you talking about the same person who started Trump University? I think you are.

Charity: Don’t forget Trump Steaks.

Beau: Trump Steaks. You know, there is a skill to selling the sizzle without any protein. Now, I’m sorry, this is supposed to be nonpartisan. I’m doing a wonderful job so far. The crypto thing aside, what I’m hearing from you is that it’s more of an ethics issue than an emoluments issue. I understand that all of you folks with the title Attorney General, at least on the Democratic side of things, are friends. You know each other. So is there any talk about telling the administration that it’s not okay, in a legal arena?

Charity: Well, I’ll let you in on our process. We meet very regularly. You’re right: all the AGs who are Democrats, at least, are friends. I’m friends with some of the Republicans too; some of them are great. But in addition to that, our staffs are all working together and in conversation with each other.

Charity: So they are looking at all kinds of issues, whether it be executive orders or other problems that have arisen in this administration so far, which have unfortunately been numerous. And we’re largely analyzing whether federal law has been violated or the Constitution has been violated, and whether our states have standing. Standing, as a refresher, means that you are a party who can bring a lawsuit.

Charity: So if someone ran over my foot, Beau, you probably would not be able to sue on that issue. It would be me; I would be the one who would have standing. Right? So your state has to be a party with standing. And especially when it comes to the Constitution: I literally swore an oath to uphold the Constitution of the United States of America. That’s right in the oath.

Charity: And I am gonna do it every time. It’s taken up a lot of my time these past six months. But that’s what we’re looking for when we’re deciding whether we should join a lawsuit.

Beau: An unfettered marketplace of entrepreneurs doesn’t seem like a great match for the ethical complexities we face when it comes to artificial intelligence among other things. We’re gonna take a quick break and when we come back we’re gonna talk about what states like Vermont can do when tech moves faster than the law.

C Break

Beau: The process you described, the legal groundwork, the need for standing, the constitutional lens, all of that feels especially important right now, because some of the biggest threats we’re facing aren’t just political; they’re structural. You mentioned earlier that AI is one of the issues that keeps you up at night.

Beau: Me too.

Beau: The technology is moving faster than the law. At one point this year, the budget bill included a 10-year ban on state-level AI regulation. It didn’t survive, but the fact that it was even proposed says a lot. So in this legal vacuum, and I think you could call it that, what should states be doing?

Charity: It’s honestly such a mess. We have such a complicated moment when it comes to AI. We have corporations racing to be the company that owns the space, the leader, and they’re willing to take risks and violate laws because they gotta get there. And Europe has always been a leader when it comes to data privacy and technology because of its experience, historically and politically.

Charity: I think a lot can be learned from what’s going to happen in Europe with the AI Act, and certain states are leaders as well and have information that is kind of unique to their arena. I’ll give you an example. Obviously, in Vermont we have an attorney general, me, who was a consumer lawyer in our consumer unit earlier in my career.

Charity: And so I have a very strong interest in data privacy, and I will always be proposing data privacy bills and ideas to the legislature. As an example, one of those was to ensure that our revenge porn statute included AI. So if someone made deepfake pornography with some old girlfriend’s face on it, that would be covered by our revenge porn law.

Charity: So, stuff like that. But that’s the challenge with AI: it can be found in so many different arenas that a comprehensive bill is almost hard to imagine, because we’re still figuring out how AI can be applied. So ideally we would have a federal law. Ideally it would be comprehensive, which is where I think Europe is leading the way.

Charity: And then we have laws that were passed a long time ago. I think there have been visionaries in Illinois when it comes to biometric data. I’m always very focused on biometric data, and on how, when it comes to AI, biometric data can be used for such meaningful and impactful and devastating ends, deepfake pornography being top of mind in that regard. But who knows what’s on the horizon when it comes to things like DNA, eye scans, all of it. So it’s really hard to know where to begin and where to end when it comes to AI.

Charity: We just have to be open to the moment and also make sure that our legislators are educated. If my whole job were focused on policy related to AI, my starting point would be to make sure that legislators in state legislatures were educated about AI: what it means, how it works, what the applications are, and what concerns and pitfalls have been identified in other countries and other states, by thought leaders, or even in science fiction.

Charity: I mean, sometimes it’s easier to understand in a science fiction context what the concerns might be than in a sort of boring application in the real world, you know?

Beau: AI is so expansive, and it’s so hard to predict all the places it can be implemented, ’cause it’s quite a large swath of our reality right now. So how about focusing on impact, and saying that we need to start thinking about guardrails when the technology places a person in danger, when the technology creates peril for a person, place, or thing? Because it could be anything. How about just focusing on, what do you call that in legal terms, when there’s damages, when someone’s run over your foot?

Charity: Damages.

Beau: There you go.

Charity: It’s literally the word. Well.

Beau: I’ve been around, you know. I know words. So with AI, let’s not focus on what it can do, but who it hurts. If we do that, is that an acceptable way to start framing some guardrails?

Charity: I mean, maybe. Can I posit another approach? What if, instead of starting at the end, the damages, we start at the beginning, the philosophy: we believe in privacy; we believe people have a right to privacy. Part of the challenge with that is that we’re at a moment in time when we have major Supreme Court decisions that were based on our right to privacy.

Charity: And they could have been based on equal protection rights, but instead they were based on our right to privacy: those involving sex, those involving gay marriage, abortion. We have this idea of privacy that’s so important, but unfortunately we also have a conservative wing of the Republican Party that is willing to sacrifice privacy for its social ideas.

Charity: And I have wondered if that’s connected. So we have that problem. The other problem with the philosophy is I feel like, in capitalist America (we love you), sometimes the idea of economic opportunity, the ability to make money, et cetera, trumps concerns about privacy.

Charity: We can’t go slow just because you want your privacy. We have to own the field, ’cause if we don’t, China will, or whatever.

Beau: Yeah. So, the move-fast-and-break-things mentality. Yes, I think that is true. First and foremost, there’s a Venn diagram where one circle says we should do everything right, and there is a right and a wrong way to do it.

Beau: And then there’s the other circle that says we need to win. And the need-to-win circle is like a Death Star in front of a teeny, tiny little moon of good intentions. So we’re in a situation where this big Death Star of aspirational world dominance in the field of artificial intelligence is beating out all considerations of caution.

Beau: And so you have everything from people predicting a doomsday caused by an AI that decides it doesn’t need us anymore, à la The Matrix, to an AI that simply treats us all as fodder for getting better at what it does, all by itself.

Beau: And so it worries me that our data could be used to train some, you know, and that is the Hollywood science fiction version of it. I’m not saying Elon Musk did anything wrong. I’m not saying Donald Trump did anything wrong. I don’t know; I wasn’t there. What I do know is that all of our data ends up being sucked into this machine that is learning everything we know and learning how to tie it to what other people know to create a different version of what is known.

Charity: The other thing that’s so great about Hollywood science fiction and reading science fiction is this: one of the things about AI that I think is problematic is a lack of imagination among us. Artists are able to imagine these crazy scenarios, and it opens up a creative portion of our own minds to say, wait, could this go wrong? Maybe this isn’t such a great thing.

Charity: And I think one of the things we should be looking at is: what is the purpose of AI? Is it to make our lives easier? We’ve been hearing for generations that new technology is going to make our lives easier. But look around. Don’t you think people are working longer hours and harder than they ever have? Are they making more money per hour for that work? Technology has not made our lives easier. It’s made capitalism better; it’s helped very rich people, mostly men, become richer. Right? So another question is, what is the good?

Charity: I don’t mean to diminish the concept that technology, if we own the field, gives us an edge, and that that’s a national security concern. I think that is a very valid perspective. If we don’t occupy the field, China will, and China is not our friend. Right. I don’t mean to diminish that. But I think there are sort of niche concerns that, from my experience and professional life, seem so obvious to me, and people aren’t talking about them. I’m gonna highlight one of them.

Charity: I see this coming a million miles away, and I hope that five years from now we’re not pulling up this podcast and saying, Charity was such a Cassandra; she warned everybody. But the romance scam using AI chatbots is a deadly combination, and it is coming. The idea that I could literally be living my life, making a sandwich, doing whatever I want, but my evil AI bots could be romancing victims by the thousands.

Charity: Extremely easy to imagine that happening.

Beau: Yes, it’s true. And it’s been happening.

Charity: It has been happening,

Beau: But you know, on the romance scam front, Charity, we actually interviewed one of the guys whose face has been used in lots and lots of scams.

Charity: Like a hot man who’s been used in romance scams?

Beau: Yeah. And he’s just all over the place.

Charity: That’s just awful. Oh gosh. Talk about a violation of privacy.

Beau: Exactly right. We live in a world now where you can go to a Coldplay concert, just be minding your own business, and pop up on the jumbotron. Not saying that you’re pure as the driven snow; you may be having an affair. But did you expect to be put on the jumbotron and then go public like that?

Beau: Like, oh, by the way, we’re having an affair to the entire world.

Charity: I know.

Beau: And so here’s the question I have. That’s a great example of the question of standing. That was the CEO of a company and the company’s head of HR on a date, both of them married to other people, and both of their lives were torn apart because their pictures were put on a screen.

Beau: Somebody reposted it on a social media platform, and other people used facial recognition AI to identify them and used data that was online to say where they lived, who they were married to, and where they worked. Talk to me about what we can do on the legal front to protect the right of American citizens who want to have an affair without being exposed on social media in front of the entire universe.

Charity: This is a great example because the stakes feel very low. This isn’t the end of the world; it’s just two individuals. This, to me, is filed squarely in the none-of-my-business department. This comes up a lot when I talk about data privacy, but I really believe it. The Vermont motto is Freedom and Unity.

Charity: And my joke is that, translated into the modern world, that means love your neighbor and mind your own business. So this is in the mind-your-own-business department. It’s none of your business what these people do, unless it’s your husband or your parent or whatever. And even then, if you’re an adult, it’s none of your business.

Charity: They get to live their lives. Right? That’s why, to me, it starts with the philosophy, the preamble, the whereas statements that begin a piece of legislation: whereas, mind your own business; whereas, everybody has a right to be free in America.

Charity: And the thing about it that’s so frustrating, and it needs to be named, is this dynamic where people unwittingly share of themselves online, then a company takes that information and uses it however it wants, and somehow it’s the person’s fault. Well, you shared this picture online. Well, everyone shares their picture online, on Facebook, and of course Facebook has had a very spotty history with protecting our privacy.

Charity: And that’s a whole other matter. But I think we find ourselves very far down the path of unwittingly being partners in this change in the ethos of privacy in America. And we have a moment, especially with the advent of AI, where we have to say no, this is what it means to be free from surveillance.

Charity: You know what I always think about? Did you watch 30 Rock? I’m sure you did.

Beau: I did, yes.

Charity: And of course all the characters are so great, but Jack Donaghy is this very fancy media person in New York City. And one of the things about him that’s hilarious is that he has a cookie jar collection.

Charity: Very surprising for this media mogul in New York City. And I think about that all the time, because it’s the kind of thing where, who cares? But it might be kind of embarrassing to him. That character might feel very embarrassed about his dorky cookie jar collection. But that’s his business. And if he wants to be free and weird with his cookie jar collection, okay, great.

Charity: That’s none of our business. He deserves to have privacy over that.

Beau: So the idea that your life, your quirks, your mistakes, even your cookie jar should be private doesn’t just apply to public figures or adults. It’s showing up in a much quieter, more everyday place: with children, specifically the apps they use to turn in homework, see grades, message teachers, and all that.

Beau: And if you don’t have children, you might not realize how early this kind of tracking starts, but it might matter a lot. That’s after the break.

C Break

Beau: Up to this point, we’ve been talking about crypto, AI surveillance, data brokers, and the philosophical right to be left alone, you know, mind your own business.

Beau: But what happens when that debate isn’t just theoretical? When it starts showing up in permission slips and classroom apps, and there seems to be no other choice for our children: here’s the app, use it. What happens when the battle over privacy begins before a child even understands what privacy means?

Beau: So, Attorney General Charity Clark (I love saying that), what do parents need to understand about the choices they’re making, often without realizing it, when it comes to their children’s data?

Charity: So if you are a parent, it is a good time to check in with what feels right for you, because schools are not there to be monitoring this. They’re there to teach children, and they’re using EdTech tools, EdTech products, to make their lives easier.

Charity: That might not match up with your philosophy about privacy. It’s okay to opt out of that stuff, you know? It’s okay to ask, as I have done, for the data privacy statements on the various apps the school is using. Because this next generation is gonna be really interesting. We are of an interesting generation.

Charity: I consider myself to be a Xennial. I’m a young Gen Xer, and I think I am in a unique generation because I had the internet and email when I was in college. I obviously had the internet a lot when I was in law school, and yet I grew up in a free and analog world. I always reference this, and I’m doing it again, but there’s this New Yorker spoof article, iconic in my own mind, about summer before the internet.

Charity: It’s about the things that we did, and for the visual, the illustrator just drew a person lying upside down in a chair, blowing a bubble. The whole article is so gold, because it’s like, yeah, that’s what life was like. So it’s something to be in this generation, to understand what it was like to be truly free, and to see now how different it is.

Charity: I teach a class at, or used to teach a class at, the University of Vermont. And I remember before class, a bunch of my students were chatting about the cool party they went to over the weekend. The reason the party was so cool is that everyone had to put their phones in a bowl in the entryway, and then they were free. No one was taking pictures, and they weren’t being surveilled.

Charity: And it just blew my mind that that was my entire college experience, you know? So my idea of privacy is gonna be different. And I think kids who are little today are going to be so much more sophisticated than millennials about privacy, because they’re gonna have parents like me freaking out over all of the data privacy concerns that we should be having as parents today.

Beau: Now, if you’re not familiar with education-oriented apps, there are several, and they actually have a storied past of being very bad on the privacy front. And there are laws. It’s one of the few places in this country where there are laws that protect the information of children: COPPA.

Beau: And yet it is a porous environment right now. And I can tell you, because I was a rather active parent back in the day with my kids, who are grown, that you can ask the school to backpack any information you need, and they will do it. They have to. They can’t force you to be on those apps, because the apps are now used the way backpacks used to be, when kids would be given a memo and then forget to give it to you.

Beau: So you had no idea what was going on. And it’s no different now, because most parents aren’t even looking at those apps. They’re just looking to see if their kids are handing in their homework.

Charity: That is 100% true. Something I’ll tell you is that we passed a law in Vermont right before the pandemic, I think. And in the course of advocating for that from the office, I learned that one of the weak links is parents waiving their rights. Violation of privacy has been so normalized that people think they’re being a Karen if they say, no, I don’t want that information shared about my kid. Or, like me, I get the form and it’s like, what’s your kid’s Social Security number?

Charity: Like, why do you need it? I’m not giving it to you. I’m making it up. My daughter and I just signed up for something yesterday; I can’t remember what it was. She wanted to watch a movie and we had to sign up, and she was just like, what should we put as our birthday? She just knows we make up a date.

Charity: I’m like, why am I sharing my birthday with Paramount Plus?

Beau: That instinct to make something up, to not give the real birthday, to question why anyone needs your Social Security number or your children’s: that’s not paranoia. That’s just a baseline defense mechanism now. And the wild part is that it’s often seen as overreacting, which is crazy. We’ve internalized this idea that resisting a privacy overreach makes you a problem, when really it just makes you smart.

Beau: But here’s the thing: once that line gets crossed enough times, we stop seeing it. Right? It’s like when a room smells bad. After a while you stop smelling it, but that doesn’t mean it doesn’t stink.

Charity: I remember, maybe 15 or 20 years ago, the first time I was checking my Gmail and getting ads on the side related to stuff in my emails. I was living in New York City, but of course I’m from Vermont, and I’m obviously low-key obsessed with Vermont and always have been.

Charity: So even when I was in New York, I was probably talking about Vermont a lot, so I was getting a lot of maple syrup ads and B&Bs and stuff. And then I remember someone in my family, a young child, got head lice. We were all together, and there was a panic in the family, you know, email chains about head lice.

Charity: And I was getting ads for head lice cream or whatever, shampoo. And I remember being so creeped out about that. And now it’s just what we expect. In fact, it’s what we sign up for. When I go on Instagram, Instagram is selling me stuff, and I buy it, you know? And I think there is a place for that.

Charity: And then I think there is a limit.

Beau: Talk to me for a second about what’s going on in the state of Vermont when it comes to privacy, or the ways in which privacy, or the lack thereof, can affect people. What’s happening on the legal front for you?

Charity: So I first advocated for a biometric data privacy act in early 2020, out of my own mouth, to a legislative committee. That committee has taken years to thoughtfully approach the issue and educate itself, and in that process it has, as the kids say, radicalized itself. They are literally so informed that they now have very strong opinions about how important our data privacy is and what a law should look like.

Charity: Ironically, it’s kind of slowed down the process, because they know so much they’re not willing to compromise on certain elements. It’s really impressive. I should note that that committee is chaired by a Republican, and, I mean, traditionally Republicans were like this in Vermont. They still are.

Charity: They value privacy. They don’t believe in giant, big government knowing all your business. So I’m not totally surprised that it’s chaired by a Republican, but I have been really impressed with that committee. One of the challenges has been that big tech has spent a lot of money across the country trying to kill privacy bills or shape them the way it wants, and Vermont has a citizen legislature with no staff.

Charity: Legislators don’t have offices, they don’t have staff. If they’re lucky, they might get an intern, and so they rely on the good faith of lobbyists to be truthful with them. What you’ll see, and I think it was last year, is that a bill passed the House, passed the Senate, and was vetoed by the governor.

Charity: When it went back to the legislature, the Senate failed to override the veto, and you can watch it on YouTube today: on the Senate floor, senators who are good people were saying things about the bill that literally just weren’t true. They were told to be afraid of things that were literally not even in the bill.

Charity: And I was watching it, like, tearing my hair out: you’re worried about that? I have great news, that’s not even in the bill. Like, yay, now you can vote to override. And so they failed to override the governor’s veto, and we still don’t have a comprehensive data privacy bill in place. Meanwhile, as technology develops and AI gets more sophisticated and more widespread than ever, I think we need more protection. So that’s kind of the status of things in Vermont.

Beau: All right. So what’s your wishlist for the next year on the privacy front for Vermont?

Charity: I would love to see specific protections. Of course we have the Consumer Protection Act, but it’s a broad act. So I would like specific protections for our biometric data, data about ourselves we can’t change: our DNA, our fingerprints, our face, our eyes, stuff like that. In addition, I’m ready for movement on AI.

Charity: I feel like there are a lot of other elements of a data privacy bill that we’ve looked at and been in favor of in the past, but the truth is we sort of missed our window, because now we have to spend our time on bigger things, and to me that’s AI. I think that there is a lack of imagination when it comes to some of the challenges with AI.

Charity: And conveniently, other places have created some examples for us to use, and that’s always a nice thing to see: oh, what do they do in this state, or in this other country, or in a federalist system like the EU? You know, that’s a nice place to look and say, is that working for them? How are we the same? How are we different?

Charity: How are our values the same or different? So, continuing the education so that we can be thinking about meaningful protections for AI.

Transition

Beau: Let’s say that an attorney general decided, I don’t want this job anymore, I’m gonna throw the next election. How about just banning all these social media platforms that are wreaking havoc on our privacy in a state? How about just saying you can’t do business in this state if you’re going to be doing what you’re doing? And that’s the thing: we’re all complicit in it. I’m gonna paint the picture for you, Charity, and I want to hear your response, ’cause I don’t think there’s an answer and I don’t think that’s the right move. The Coldplay couple that got identified, they were identified by other citizens.

Beau: They weren’t identified by their family, like you pointed out. We’re all, I think, participants in this destruction of our own privacy.

Charity: Yes.

Beau: So let’s say, I mean, if you want to talk about passing a law, like, ban social media, I guess I’ll help you lose your job.

Beau: But how about something probably more doable, which is getting people to understand what the stakes are when they post their information and post things about their lives?

Charity: I think that’s a much better approach. Especially, I’ll say, here in Vermont, we don’t have a lot of newspapers left, and so Facebook actually serves as an important media source for people. I mean, every community has their own kind of Facebook, and if you want to reach that community, you go there. I was advertising on Facebook when I ran for office the first time, in 2022, because I knew there were certain communities that didn’t have a newspaper.

Charity: That’s where people got their news. So they actually serve a great purpose in the process, I think. I don’t agree with the choices that they have made regarding privacy.

Charity: But you nailed it when you said that we are participants in the, you know, destruction, removal, risk of our own privacy. And that’s what I want to stop. I just think, how can you be free if you’re being surveilled? And the thing about it is, we allow private companies to do things we would never tolerate our government doing, and then they sell their products to the government to surveil us, you know?

Charity: I just don’t agree with that. I feel like people should just go out in the world and be free, and they should be able to go to a concert and not feel like they’re being surveilled, you know? And that could just be, like, getting narced on by their fellow concertgoers. Everybody should just mind their own business and be free.

Outro

Beau: Charity Clark, Vermont’s Attorney General, thank you so much for joining us.

Charity: Thanks for having me. It’s been fun.

TFS

And now it’s time for our Tinfoil Swan, our paranoid takeaway to keep you safe on and offline. So say you are worried about your privacy and you don’t know if you should be opting in to scheduling apps for your children (PupilPath is one of them; there are many).

You need to get in touch with the administrators of your school. Now, you may think this is gonna cause problems, and it might, because it does create an extra layer of work. But read up on these student tracking apps, student scheduling apps, and student grade apps; they’re all the same thing.

Read up on their privacy policies. They’re not great. Now, you have the right to not participate in the defeat of your kids’ privacy, right? You do. Call your school up. Ask them for backpack notes. That is the most important thing you can do to give your child some agency over the entire lifetime of their privacy, because if they lose it when they’re young, before they have the ability to say yes or no, before they have the ability to understand it, is that really fair?

Stay safe out there and have a great week.

Our privacy advisors:

- Continuously find and remove your sensitive data online

- Stop companies from selling your data – all year long

- Have removed 35M+ records of personal data from the web

Exclusive Listener Offer

What The Hack brings you the stories and insights about digital privacy. DeleteMe is our premium privacy service that removes you from more than 750 data brokers like Whitepages, Spokeo, BeenVerified, plus many more.

As a WTH listener, get an exclusive 20% off any plan with code: WTH.