Incognito — May 2025: Understanding AI, Risks, and How to Protect Your Privacy

Laura Martisiute

Reading time: 14 minutes

Welcome to the May 2025 issue of Incognito, the monthly newsletter from DeleteMe that keeps you posted on all things privacy and security.

Here’s what we’re talking about this month:

- AI. The different types, risks, and privacy precautions you can take.

- Recommended reads, including “Hertz Customer Data Breached.”

- Q&A: How can I see if there are any AI-generated/manipulated photos of me online?

We’re All Talking About “Weak AI.” Literally.

When you hear someone talk about “AI,” what exactly are they talking about?

Right now, it’s “narrow AI.”

Also known as “weak AI,” narrow AI is a type of artificial intelligence that can perform a specific task or a narrow range of tasks. Weak AI tools do not possess general intelligence or human-like understanding. They are highly specialized and trained for one purpose.

Think:

- Chatbots that are designed to respond to your queries (like ChatGPT).

- Spam filters (detecting and blocking spam emails).

- Facial recognition (recognizing people in photos).

- Netflix recommendation system (making recommendations based on your history).

- Tesla Autopilot (assisting with driving under certain conditions).

- Various voice assistants (responding to voice commands).

Gen AI, LLMs, Agentic AI… What’s the Difference?

There are several different “types” of AI technologies used today, all of which fall under “narrow AI.”

Generative AI (Gen AI) refers to tools that create new content, e.g., DALL·E and Midjourney for images. These systems are powerful but limited to the functions they were trained for, such as generating code, text, or images.

Large Language Models (LLMs) are a subset of Gen AI, focused on understanding and generating human language specifically. Examples include ChatGPT, Claude, and Meta’s LLaMA. They specialize in tasks like answering questions, summarizing content, and writing text.

Agentic AI refers to systems that can autonomously make decisions, set goals, and execute tasks across different domains. While a precise definition is still evolving, these tools are proactive, connect models with other applications (like software on your PC or applications in your home), and can operate without constant user prompts.

An agentic AI might do something like constantly scan the weather forecast and your calendar and prompt you to rethink your plans for that outdoor family event next week when a storm looks likely.

They may interact with APIs, tools, or environments to complete complex objectives. A current example is AutoGPT. Today’s agentic AIs are still considered weak AI.

What if we went beyond “weak AI”? We’d encounter Artificial General Intelligence (AGI). This kind of AI would be able to handle any intellectual task a human can, with little or no task-specific training. But it doesn’t exist yet.

So, when we talk about AI today, we are referring to “narrow AI” – mostly gen AI tools.

Whatever Type of AI You Interact With, the Risks Are Still the Same

In our March 2024 Incognito issue, we discussed the privacy risks of gen AI.

At the time, we said:

“The data you share typically becomes part of the service’s LLM data set, and there is no knowing where it will end up or who will see it.

We’ve already seen a bug expose (some) ChatGPT users’ conversation histories; security researchers have proved that OpenAI’s custom chatbots (GPTs) can be manipulated to leak training information and other private data; and both ChatGPT and Microsoft Bing say human AI trainers can monitor user conversations – which may or may not be a big deal (insider threats are as big a risk as ever).”

All of that is still true.

Just last month, it was reported that some ChatGPT answers were swapped between different users.

And in March, Wired reported that the security researcher Jeremiah Fowler discovered an unsecured database left open online by the AI image-generation company GenNomis without encryption or password protection, revealing not only the outputs but also the AI prompts used to create them.

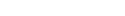

Think Twice Before Making That AI-Generated Action Figure

You’ve likely seen friends, family, and the people you work with turn themselves into AI dolls in the last few weeks. Maybe you’ve even done the same?

Creating AI-generated action figures (or any other image-generated fun) involves sharing a lot of personal data with an AI model, including a photo (ideally a full body photo) and other identifying details, like your hobbies, job, personality, etc.

When you stop to think about how much information you’re sharing, you can see the issue. But the problem is that it doesn’t feel like a privacy issue while you’re doing it.

When trying to justify sharing personal data to create a funny image to amuse a friend, you might think that all your data was already online or in the AI’s database somewhere.

Well, according to the researcher Luiza Jarovsky, it might not be. And giving personal data straight to LLMs might be exactly what their developers want you to do.

Jarovsky calls trends like the recent AI action figure boom a “clever privacy trick” and a way for AI companies to get free access to thousands of new faces to train their AI.

Here’s why:

- In jurisdictions like the EU and various US states with strict privacy laws, personal data is protected. To get personally identifiable data like photos, OpenAI must follow strict rules, but if users upload images themselves, they essentially give consent. This lets OpenAI use any uploaded data more freely, including to train AI.

- When people willingly upload fresh, often private images to ChatGPT for fun, they give OpenAI exclusive access to original photos that other platforms only see in edited form (unless you opt out).

How might this information you share with AI models be used, and by whom? No one knows.

“The detailed personal and behavioral profiles that tools like ChatGPT can create using this information could influence critical aspects of your life – including insurance coverage, lending terms, surveillance, profiling, intelligence gathering or targeted attacks,” said Eamonn Maguire, Head of Account Security at Proton, in a TechRadar article.

At DeleteMe, we predict that AI tools might be the next step in hyper-personalized advertising.

Google has already been experimenting with ads in AI overviews.

Plus, just last month, Aravind Srinivas, CEO of AI search engine Perplexity, said that his company will “use all the context (provided by user queries) to build a better user profile and, maybe you know, through our discover feed we could show some ads there.”

(More) AI Features… Coming Soon

Some AI-related things that are currently in the works or are already being rolled out:

- OpenAI is reportedly building a social media platform. Should you join? Only if you want to further share your data with AI. According to publications like Gizmodo, that’s the only reason the social media platform is in the works.

- Perplexity is building its own browser. Because… you guessed it, it wants more of your data. Perplexity’s CEO said: “We want to get data even outside the app to better understand you. Because some of the prompts that people do in these AIs is purely work-related. It’s not like that’s personal.”

- Microsoft Recall is back. The controversial AI feature that logs everything you do on your Windows PC is rolling out gradually over the next few weeks. You don’t have to use it, of course, but if your family or friends do and you share something sensitive with them, then that will be logged.

AI Is Now Used By Everyone – Especially the Bad Guys

We’ve talked about AI-enhanced phishing emails, scam calls, and deepfake videos before. But what about the stuff you encounter every day on the internet?

In 2024, Google blocked 5.1 billion ads. It also suspended more than 700,000 advertiser accounts for violating policies related to AI-driven impersonation scams.

- It’s not just Google. Social media sites like Facebook are also rampant with deepfake ads.

- Despite their best efforts, these platforms don’t seem to be able to keep up.

True or false: The “Pro Power Save” device is endorsed by Elon Musk.

Answer: False! Musk is just one of the many celebrities whose image is being used to promote scams.

Also: Anyone can use AI to harm you. Last month, a former high school athletics director who used AI to create a racist and antisemitic deepfake of a Maryland principal was sentenced to four months in jail under a plea deal for disrupting school operations.

What’s the Future of AI?

Right now, agentic AI is hyped as the next leap in AI development.

But as you can imagine, experts are concerned about the privacy risks associated with agentic AI.

For example, Signal president Meredith Whittaker pointed out that AI agents need near-root access to sensitive data: browsers, credit cards, calendars, and messaging apps. Most agent tasks also require unencrypted access, with processing done in the cloud, increasing your exposure to breaches.

How to Use AI Privately

The first thing to note is that AI companies don’t want you to use AI privately.

The CEO of OpenAI, Sam Altman, has openly said that because AI is evolving so quickly, privacy safeguards can’t be put into place until problems emerge. So our advice is to take precautions if you use AI tools.

Avoid sharing personal info: Don’t input sensitive data like your real name, address, passwords, or financial information into AI.

Use anonymous accounts: When possible, use burner or pseudonymous email addresses and logins for AI tools.

Clear chat histories: If the AI platform allows, regularly delete your chat or search history.

Turn off personalization features: Opt out of features that “remember” you to avoid data being stored for tailored responses.

Read privacy policies: Understand how the AI tool stores, uses, and shares your data — especially whether your input can be used for training models. See this tech.co article about the AI apps that collect the most user data (and what they do with it).

Use open-source or local models: Run open models like LLaMA on your own machine using tools like GPT4All or private GPT wrappers.
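
If you like to tinker, the first tip (avoid sharing personal info) can be partly automated. Below is a minimal Python sketch that scrubs a few obvious identifiers from a prompt before you paste it into an AI tool. The patterns and the `redact` function are our own illustrative assumptions, not a feature of any AI product, and they only catch US-style phone numbers, Social Security numbers, and email addresses. Names, street addresses, and anything less regular will slip through, so treat it as a safety net and still reread your prompt.

```python
import re

# Hypothetical patterns for illustration only. Real personal data takes
# many more forms than these three (names, addresses, account numbers...).
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(
        r"(?<!\d)(?:\+?1[ .-]?)?\(?\d{3}\)?[ .-]?\d{3}[ .-]?\d{4}(?!\d)"
    ),
}


def redact(text: str) -> str:
    """Replace each match of a known pattern with a [LABEL] placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    prompt = "Email me at jane.doe@example.com or call 555-123-4567."
    print(redact(prompt))
```

Running the scrubber on your own machine means the original text never leaves your computer; only the redacted version gets pasted into the AI tool.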

Readers’ Tips

Once in a while, we share tips from our readers.

Last month, we answered the question, “Does dumpster diving still happen?” The answer was yes, and our advice was to get a paper shredder.

One of our readers wrote in with fantastic advice:

“Everyone doesn’t have a paper shredder. So here are a few low cost alternatives.

Use a black marker pen to hide your information.

If you have a wood stove, or you burn brush, save those papers with your identifying information to start your fires with. You can also burn them in a charcoal grill, if you have one.

If you do shred your papers, you can use the shreds as a first layer around plants to make your mulch go further.

We’re all looking for ways to save money nowadays. So before you run out and buy a shredder, think about doing the things I suggested above. And hey, you can always use your hands to tear things into small pieces! That can be a fun activity for small kids, too!”

We’d Love to Hear Your Privacy Stories, Advice and Requests

Do you have any privacy-related dating app experiences you’d like to share? Have you ever been targeted with a romance scam? And how did you spot it for what it was?

Also, do you have any privacy stories you’d like to share or ideas on what you’d like to see in Incognito going forward?

Don’t keep them private!

We’d really love to hear from you this year. Drop me a line at laura.martisiute@joindeleteme.com.

I’m also keen to hear any feedback you have about this newsletter.

Recommended Reads

Our recent favorites to keep you up to date in today’s digital privacy landscape.

Record $16.6 Billion Lost to Cybercrime In 2024, Says FBI

The FBI reported a record $16.6 billion in US cybercrime losses in 2024, mostly from fraud, with older Americans hit hardest. The organization also recently put out an alert warning consumers that scammers are impersonating IC3 employees to trick fraud victims into handing over more personal and financial information under the guise of recovering lost funds.

Hertz Customer Data Breached

Hertz notified customers of a data breach caused by a cyberattack on its vendor, Cleo, between October and December 2024. The stolen data includes names, birth dates, contact information, driver’s licenses, payment card details, and, for some, Social Security numbers. The breach affects customers in the US, Australia, Canada, New Zealand, the UK, and the EU.

WhatsApp Introduces ‘Advanced Chat Privacy’ Feature

WhatsApp released ‘Advanced Chat Privacy,’ a new feature that prevents exporting chats, auto-downloading media, and using messages for AI features. This feature can be enabled in private chats and group conversations. Though it helps protect sensitive conversations, content can still be captured through other methods like taking photos.

Trump Moves to Merge Government Data

The Trump administration, in an effort led by Elon Musk’s Department of Government Efficiency (DOGE), is moving to consolidate government databases containing sensitive personal information about Americans. Officials say this will help uncover waste and fraud. Privacy advocates worry it could enable political abuse, create security risks, and violate long-standing privacy laws.

You Asked, We Answered

Here are some of the questions our readers asked us last month.

Q: Should I use a random name for everything I do online? And how can I create these names and keep track of them?

A: It depends on what you’re doing online and on your tolerance for managing multiple identities and the potential headaches that come with them.

Generally, it’s recommended to use random names on forums, social media sites, and other online accounts you’re not sure about, or if you’re talking about controversial/sensitive topics.

It’s not recommended to use random names on LinkedIn (and other sites where you have a professional presence), government services, banks, etc.

To generate a random name, you can use a random name generator like https://www.fakenamegenerator.com/gen-random-us-us.php.

There are also browser extensions that can fill form inputs with fake data (though they’re mostly used by developers for quick testing).

When it’s not strictly required, don’t include a name, just a username. Your username should also be unique on every account you use.

The ideal scenario is the following:

- Unique username.

- Masked email address.

- Unique name (if needed).

The best way to create and manage the above is with a password manager – most of them have a feature for creating aliases and optional fields where you can store your random name (and other details you want to remember).
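
If you’d rather generate usernames yourself, here is a minimal Python sketch of the “unique username on every account” idea. The word lists, the `random_username` and `usernames_for` functions, and the site names are all hypothetical choices for illustration. It uses Python’s standard `secrets` module so the picks are not predictable, and it makes sure no two accounts in the same batch get the same username.

```python
import secrets

# Hypothetical word lists for illustration; longer lists give more variety.
ADJECTIVES = ["quiet", "amber", "brisk", "lunar", "mossy", "plain"]
NOUNS = ["otter", "maple", "comet", "harbor", "finch", "ridge"]


def random_username(digits: int = 4) -> str:
    """Build a username like 'amber-finch-0427' from random parts."""
    adjective = secrets.choice(ADJECTIVES)
    noun = secrets.choice(NOUNS)
    suffix = "".join(secrets.choice("0123456789") for _ in range(digits))
    return f"{adjective}-{noun}-{suffix}"


def usernames_for(sites: list[str]) -> dict[str, str]:
    """Assign a different random username to each site in the list."""
    used: set[str] = set()
    result: dict[str, str] = {}
    for site in sites:
        name = random_username()
        while name in used:  # regenerate on the rare collision
            name = random_username()
        used.add(name)
        result[site] = name
    return result


if __name__ == "__main__":
    for site, name in usernames_for(["forum", "shop", "news"]).items():
        print(f"{site}: {name}")
```

You would still want to store the results in your password manager, since the script does not remember which username belongs to which account.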

Q: I’ve been hearing of people finding AI-generated/manipulated photos of them. How can I check that there isn’t anything like this of me on the web?

A: Great idea, and something that everyone should do once in a while as part of their digital footprint management.

The easiest way to check is through reverse image search: For example, in Google Images, you can upload your photo or drag-and-drop it to see where it and similar photos (including AI-manipulated ones) appear online.

If possible, use a photo already on the web for privacy reasons.

Back to You

We’d love to hear your thoughts about all things data privacy.

Get in touch with us. We love getting emails from our readers (or tweet us @DeleteMe).

Don’t forget to share! If you know someone who might enjoy learning more about data privacy, feel free to forward them this newsletter. If you’d like to subscribe to the newsletter, use this link.

Let us know. Are there any specific data privacy topics you’d like us to explore in the upcoming issues of Incognito?

That’s it for this issue of Incognito. Stay safe, and we’ll see you in your inbox next month.

Don’t have the time?

DeleteMe is our premium privacy service that removes you from more than 750 data brokers like Whitepages, Spokeo, BeenVerified, plus many more.

Save 10% on DeleteMe when you use the code BLOG10.